Project summary

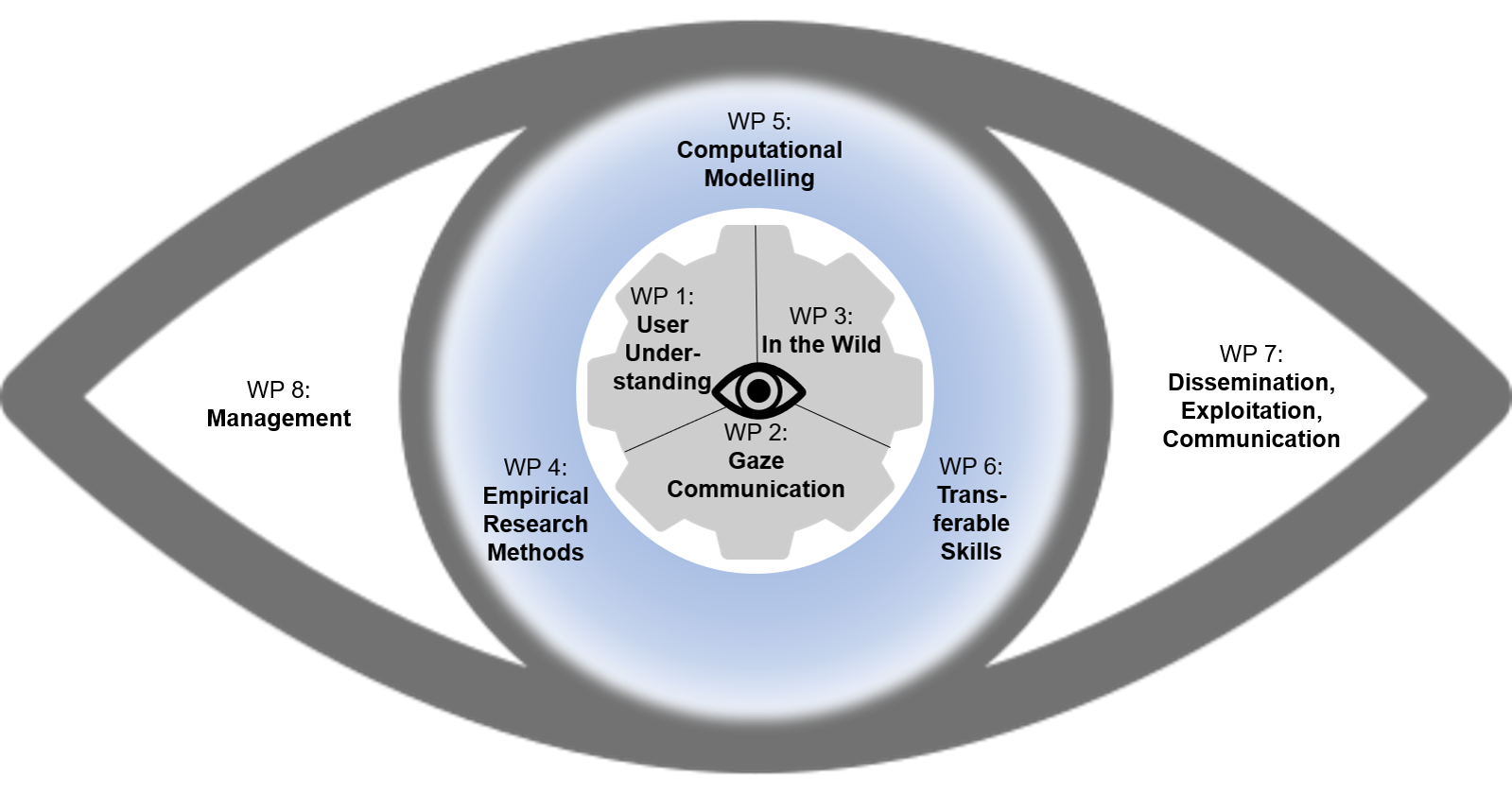

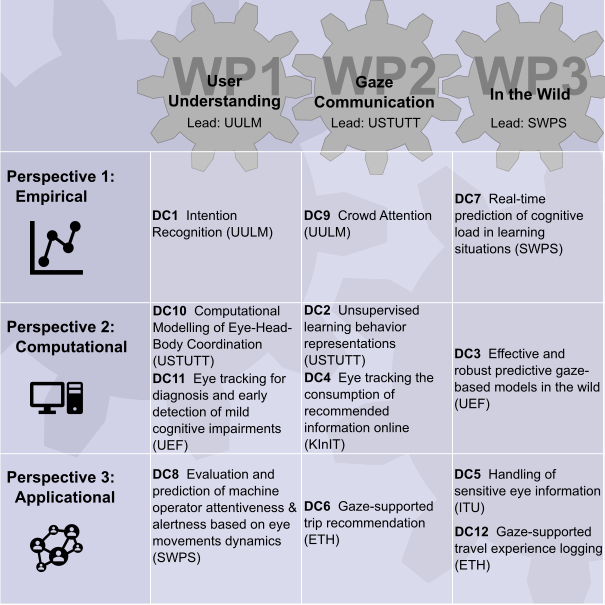

Gaze is an important communication channel that can be captured remotely and that works even without language. It therefore holds great potential for universal, inclusive technologies. Eyes for information, communication, and understanding (Eyes4ICU) explores novel forms of gaze interaction that build on current psychological theories and findings, computational modeling, and expertise in highly promising application domains. Its approach of developing inclusive technology by tracing gaze interaction back to its cognitive and affective foundations (a psychological challenge) yields better models for predicting user behavior (a computational challenge). By integrating insights from application fields, gaze-based interaction can be employed in the wild. Accordingly, the proposed research is divided into three work packages: Understanding Users (WP1), Gaze Communication (WP2), and In The Wild (WP3). All three work packages are pursued from three perspectives (psychological-empirical, computational modeling, and application), ensuring unified, aligned progress and a common concept. Along the same lines, training is divided into three work packages: Empirical Research Methods (WP4), Computational Modeling (WP5), and Transferable Skills (WP6). Consequently, the consortium is composed of groups working in psychological, computing, and application fields. All Beneficiaries are experts in using eye tracking in their respective areas, ensuring best practices and optimal facilities for research and training. A variety of Associated Partners from the whole chain of eye tracking services ensures applicability, practical relevance, and career opportunities by contributing to supervision, training, and research. This will advance eye-tracking-based communication as a field and result in European standards for gaze-based communication in a variety of domains, disseminated through research and application.

PhD Projects

Overview

Description of individual PhD Projects

Objectives: Searching is one of the most important tasks in interacting with computers, and search assistance requires an understanding of the user's search intent. Building on earlier findings that changes in pupil size denote decisions (Strauch et al., 2019) and that gaze-based target selection benefits from pupil assistance (PATS; Ehlers et al., 2018), this PhD project aims to systematically examine the spatial and temporal relations of a variety of fixation-based and pupillometric eye-tracking parameters in a search task in order to identify the critical parameters that allow search intent to be predicted. In years 1 and 2, relevant parameters will be investigated from a psychological perspective in a series of experimental lab studies, and their influence will be quantified. Thresholds, e.g., for pupil size or fixation duration, will be determined individually using classical psychophysical methods. Based on these findings, an assistive search functionality will be conceived in the third year.
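One classical psychophysical method for determining an individual threshold is the adaptive staircase. The sketch below is purely illustrative and is not the project's protocol: the 1-up/1-down rule, the starting level, the step size, the number of reversals, and the deterministic "observer" are all hypothetical assumptions.

```python
from typing import Callable, List


def staircase_threshold(
    responds: Callable[[float], bool],
    start: float,
    step: float,
    n_reversals: int = 8,
    max_trials: int = 200,
) -> float:
    """Estimate an individual threshold with a simple 1-up/1-down staircase.

    `responds(level)` returns True if the participant detects (or reacts to)
    the stimulus at that level. After a detection the level is lowered by
    `step`; after a miss it is raised by `step`. The estimate is the mean of
    the levels at which the direction of change reversed.
    """
    level = start
    last_direction = 0  # +1 = last step went up, -1 = down, 0 = no step yet
    reversals: List[float] = []
    for _ in range(max_trials):
        direction = -1 if responds(level) else 1
        if last_direction != 0 and direction != last_direction:
            reversals.append(level)  # the response pattern flipped here
            if len(reversals) >= n_reversals:
                break
        last_direction = direction
        level += direction * step
    if not reversals:
        raise RuntimeError("no reversals within max_trials; adjust start/step")
    return sum(reversals) / len(reversals)


def observer(duration_ms: float) -> bool:
    """Hypothetical deterministic observer: detects durations >= 300 ms."""
    return duration_ms >= 300.0


print(staircase_threshold(observer, start=500.0, step=20.0))  # 290.0
```

With a real, noisy participant, a 1-up/1-down rule converges near the level detected on about 50% of trials; other rules (e.g., 2-down/1-up) target a higher detection probability.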

Doctoral Candidate: Valentin Foucher

Supervisor: Anke Huckauf

Host Institution: Ulm University, Germany

Objectives: The goal of this project is to pioneer computational methods for learning rich, general-purpose representations of everyday gaze behavior. This approach is inspired by recent advances in computational methods for learning contextualised representations of natural language, such as BERT or GPT. As in natural language understanding, a general-purpose representation of gaze behavior has significant potential to improve a variety of downstream tasks, such as eye-based activity recognition or automatic gaze behavior analysis. Learning such representations, however, requires large amounts of training data. We plan to address this by drawing on a large number of existing eye tracking datasets (both short- and long-term, some of them our own) and by complementing these, as required, with additional data that we collect ourselves. Using these data, we will then develop new methods to learn gaze representations in an unsupervised manner and evaluate them on different gaze-based downstream tasks. In the second half of the project, we will extend these methods to enable joint predictions from gaze and other modalities, in particular egocentric video. Inspired by recent Transformer-based methods that have shown significant improvements on a variety of vision-and-language tasks, we will investigate vision-and-gaze tasks of broad relevance, such as visual exploration and decision making, and develop the first computational methods to address them. Finally, together with our non-academic partner, we will develop a method for introspecting the learned gaze behavior representations.

Doctoral Candidate: Chuhan Jiao

Supervisor: Andreas Bulling

Host Institution: University of Stuttgart, Germany

Objectives: While the theoretical and computational underpinnings of gaze-based detection and prediction of user states have been explored previously, their practical applicability in real-life settings has not. We will investigate some of the most widely studied gaze-based detection problems (skill/expertise, workload monitoring, intention detection, action detection) and build, replicate, and test predictive models, with effectiveness and robustness in real-life settings as the primary objectives. This is important for future applications of eye tracking as a ubiquitous sensor. A standardized benchmarking framework, reference hardware, and backend connectivity will be built and used, allowing careful examination of boundary conditions in terms of 1) the computational power and transmission networks needed, 2) environmental stability, and 3) the accuracy and latency of the response.

Doctoral Candidate: Mohammadhossein Salari

Supervisor: Roman Bednarik

Host Institution: University of Eastern Finland, Finland

Objectives: Recommender systems use machine learning to predict users’ interest in content from their interactions, mostly clicks. However, the observed data are affected by presentation bias (users tend to click more on the first items in a list) and self-selection bias (users tend to rate more of the items they like than the ones they dislike), both of which negatively impact the performance of recommender systems. The objective of the project is to use remote eye tracking to: (1) model how users consume the recommended items and interact with recommender systems, (2) debias the observed implicit feedback, and (3) improve existing offline and online evaluation methodologies. From the eye tracking perspective, the challenge lies in the dynamic nature of the web interfaces in which recommendations are presented: content (targets) can change position over time or be overlaid by other content. The project will therefore also contribute to the development of methods for mapping gaze to targets, while accounting for the measurement inaccuracies that can arise from using eye tracking in the uncontrolled environment of the web.
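A minimal sketch of mapping gaze samples to targets whose positions change over time. It assumes a hypothetical log of time-stamped bounding boxes per target (`TargetState`) and a fixed pixel margin to absorb tracking inaccuracy; the data structure, the margin, and the tie-breaking order (exact hit, then topmost element, then nearest box centre) are illustrative choices, not the project's method.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TargetState:
    """Hypothetical record: where a target was displayed during [t_start, t_end)."""
    target_id: str
    t_start: float   # seconds
    t_end: float
    x: float         # left edge, px
    y: float         # top edge, px
    width: float
    height: float
    z: int = 0       # stacking order; higher values are drawn on top (overlays)


def map_gaze(t: float, gx: float, gy: float,
             states: List[TargetState], margin: float = 30.0) -> Optional[str]:
    """Return the id of the target a gaze sample most likely belongs to, or None.

    Only targets visible at time t are considered. Each box is enlarged by
    `margin` px to tolerate tracking inaccuracy. Exact hits beat margin-only
    hits, then the topmost element wins, then the nearest box centre.
    """
    candidates = []
    for s in states:
        if not (s.t_start <= t < s.t_end):
            continue  # target not on screen at this moment
        exact = s.x <= gx <= s.x + s.width and s.y <= gy <= s.y + s.height
        near = (s.x - margin <= gx <= s.x + s.width + margin
                and s.y - margin <= gy <= s.y + s.height + margin)
        if not near:
            continue
        cx, cy = s.x + s.width / 2, s.y + s.height / 2
        dist2 = (gx - cx) ** 2 + (gy - cy) ** 2
        # Tuples sort lexicographically: False (exact hit) sorts before True.
        candidates.append((not exact, -s.z, dist2, s.target_id))
    return min(candidates)[3] if candidates else None


layout = [
    TargetState("A", 0.0, 5.0, 0, 0, 100, 50),             # item A, first position
    TargetState("A", 5.0, 10.0, 0, 120, 100, 50),          # item A after it moved
    TargetState("B", 0.0, 10.0, 0, 60, 100, 50),
    TargetState("popup", 8.0, 10.0, 0, 0, 200, 200, z=1),  # overlay
]
print(map_gaze(6.0, 50, 130, layout))  # A (A has moved under the gaze point)
print(map_gaze(9.0, 50, 130, layout))  # popup (the overlay now covers A)
```

A fixed margin is the simplest way to absorb calibration error; a probabilistic assignment (e.g., weighting targets by distance) would be a natural refinement when targets are densely packed.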

Doctoral Candidate: Santiago de Leon-Martinez

Supervisors: Maria Bielikova, Robert Moro

Host Institution: Kempelen Institute of Intelligent Technologies, Slovakia

Objectives: The project aims to identify sensitive eye information and to propose methods that allow real-time and stored eye information to be measured and used while ensuring legal compliance. The goal is to work towards a fully GDPR-compliant (General Data Protection Regulation) eye information pipeline that balances utility and security. No fully GDPR-compliant eye trackers or eye movement databases currently exist. Current methods ensure GDPR compliance only partially (e.g., with respect to identity); to improve compliance, an overview of which sensitive information can be measured with an eye tracker is needed.

Doctoral Candidate: David Kovacs

Supervisor: Dan Witzner Hansen

Host Institution: IT University of Copenhagen, Denmark

Objectives: To generate trip recommendations for gaze-guided tours based on the experiences of multiple users. These recommendations will provide users with information about which places and objects of interest to visit. To record and annotate trip experiences for specific places and objects of interest based on gaze, video, location, and physiological signatures (e.g., heart rate) in Virtual Reality (VR). To train and validate a real-time classifier for trip experiences that can process the data as a stream during recording. To develop a recommender system that suggests individual trips based on collective experiences; such a system can take the user’s changing state into account and adapt its recommendations in real time. To evaluate the user experience of the recommender system in a user study.

Doctoral Candidate: Lin Che

Supervisor: Peter Kiefer

Host Institution: Eidgenössische Technische Hochschule Zürich, Switzerland

Objectives: To develop an innovative method for real-time monitoring of cognitive load in the context of learning. The method will identify focal and ambient modes of visual information processing (measured, e.g., by the K-coefficient) coupled with pupillary activity (measured, e.g., by the LHIPA index). DC7 will develop predictive machine learning models and algorithms for estimating an individual student’s cognitive overload during learning based on their pupillary activity. This novel approach assumes that cognitive overload is most likely to occur during focal stages of visual information processing. The reliability and sensitivity of this method will be tested in a series of ecologically valid educational eye tracking experiments. Cognitive load will be assessed online to provide real-time feedback to students and teachers. At the same time, subtle modifications to multimedia learning environments will be made to implicitly lower students’ cognitive load. Information about individual students’ levels of cognitive load will be signaled to their teachers, who can then act to reduce the students’ overload. The real-time visualization of gaze and of cognitive states estimated from eye movement dynamics will be developed in collaboration with DC9. The project will enhance knowledge of cognitive load dynamics during learning. Furthermore, it will provide clear recommendations for teachers and educational practitioners, as well as a prototype of gaze-based machine learning tools for assessing cognitive load in education. Complementing DC5, it will also address how information about students’ cognitive states can be communicated to and exchanged with teachers in the wild in compliance with the GDPR.
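The K-coefficient is commonly defined per fixation as the difference between the z-scored fixation duration and the z-scored amplitude of the saccade that follows it: K_i = (d_i − μ_d)/σ_d − (a_{i+1} − μ_a)/σ_a, with K > 0 read as focal and K < 0 as ambient processing. A minimal sketch under that definition, assuming fixation durations and saccade amplitudes have already been extracted and that population standard deviations over one recording are used (both are assumptions, not specifications of this project; pupillary measures such as LHIPA are not covered).

```python
from statistics import mean, pstdev
from typing import List, Sequence


def k_coefficient(durations: Sequence[float],
                  amplitudes: Sequence[float]) -> List[float]:
    """Per-fixation K_i = (d_i - mean_d) / sd_d - (a_{i+1} - mean_a) / sd_a.

    durations[i]  -- duration of fixation i
    amplitudes[i] -- amplitude of the saccade that FOLLOWS fixation i
    Means and (population) standard deviations are taken over the whole
    sequence passed in, e.g., one participant's recording (an assumption).
    """
    if len(durations) != len(amplitudes) or len(durations) < 2:
        raise ValueError("need >= 2 fixation/saccade pairs of equal length")
    mu_d, sd_d = mean(durations), pstdev(durations)
    mu_a, sd_a = mean(amplitudes), pstdev(amplitudes)
    if sd_d == 0 or sd_a == 0:
        raise ValueError("z-scores undefined for constant input")
    return [(d - mu_d) / sd_d - (a - mu_a) / sd_a
            for d, a in zip(durations, amplitudes)]


def attention_mode(k: float) -> str:
    """K > 0: long fixation followed by a short saccade (focal); K < 0: ambient."""
    if k > 0:
        return "focal"
    if k < 0:
        return "ambient"
    return "undetermined"


ks = k_coefficient([100, 300], [8.0, 2.0])  # ms, degrees of visual angle
print(ks, [attention_mode(k) for k in ks])  # [-2.0, 2.0] ['ambient', 'focal']
```

For real-time monitoring, the means and standard deviations would have to come from a baseline or a sliding window rather than the full recording; that choice is left open here.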

Doctoral Candidate: Merve Ekin

Supervisors: Izabela Krejtz, Krzysztof Krejtz

Host Institution: SWPS University of Social Sciences and Humanities, Poland

Objectives: To characterize heavy-duty vehicle operators’ loss of attention by monitoring the dynamics of ambient and focal visual information processing and their gaze entropy while operating vehicles. Based on characteristics of gaze scanpaths, DC8 will develop a model that distinguishes between ambient stages of the attention process with high entropy (attention loss) and focal stages with low entropy. The model will triangulate gaze-based metrics with other psychophysiological measurements related to attentiveness and alertness, e.g., heart rate variability. In simulator studies of heavy-duty machine operation, complemented by in-lab experiments, DC8 will examine attention quantitatively and qualitatively, in particular the relationship between ambient-focal visual information processing, gaze entropy, and the potential consequences of hazardous operator behavior resulting from loss of attention.
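Gaze entropy is typically computed over areas of interest (AOIs). A minimal sketch of two common measures, assuming fixations have already been labeled with hypothetical AOI names: stationary gaze entropy (how evenly gaze is spread across AOIs) and gaze transition entropy (how predictable the transitions between AOIs are). Keeping self-transitions and estimating p_i from outgoing transitions are assumptions of this sketch; how the values map onto ambient and focal stages in this project is not specified here.

```python
from collections import Counter
from math import log2
from typing import Sequence


def stationary_entropy(aois: Sequence[str]) -> float:
    """Shannon entropy (bits) of the distribution of fixations over AOIs."""
    n = len(aois)
    if n == 0:
        return 0.0
    # sum of p * log2(1/p), which is non-negative term by term
    return sum((c / n) * log2(n / c) for c in Counter(aois).values())


def transition_entropy(aois: Sequence[str]) -> float:
    """Gaze transition entropy (bits): H_t = -sum_i p_i sum_j p_ij log2 p_ij.

    p_i is the share of transitions leaving AOI i and p_ij the empirical
    probability of moving from AOI i to AOI j. Self-transitions (two
    consecutive fixations on the same AOI) are kept in this sketch.
    """
    transitions = list(zip(aois, aois[1:]))
    if not transitions:
        return 0.0
    n = len(transitions)
    leaving = Counter(src for src, _ in transitions)
    h = 0.0
    for (src, _dst), count in Counter(transitions).items():
        p_i = leaving[src] / n
        p_ij = count / leaving[src]
        h += p_i * p_ij * log2(1 / p_ij)
    return h


# Hypothetical AOI sequence from an operator's cab.
scan = ["road", "mirror", "road", "dashboard", "road", "mirror", "road", "dashboard"]
print(stationary_entropy(scan))  # 1.5
print(transition_entropy(scan))  # ≈ 0.571 (= 4/7)
```

Higher values indicate more dispersed or less predictable scanning; in practice the measures are often normalized by their maximum (log2 of the number of AOIs) to compare layouts with different AOI counts.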

Doctoral Candidate: Filippo Baldisserotto

Supervisors: Izabela Krejtz, Krzysztof Krejtz

Host Institution: SWPS University of Social Sciences and Humanities, Poland

Objectives: Giving talks, e.g., in teaching, requires managing the attention of an audience. Information about this so-called crowd attention, which speakers are known to assess by monitoring the audience's gaze behavior in real time, is unavailable in online and distance settings. To help speakers assess and manage crowd attention during talks, the audience's gaze targets must be observed; visualizations of crowd attention will then be developed, implemented, and investigated in user studies in the lab and in real and online classrooms. A joint study with DC7 will help identify the important parameters to visualize. If successful, visualizations of crowd attention will necessarily have implications for privacy, i.e., for the subjective impression of being observed. Such visualizations might even help users in other contexts, e.g., as a warning signal when using social media. This innovative application area will be elaborated further in close exchange with DC5 and examined against the background of behavioral changes in private and public settings.

Doctoral Candidate: Mehtap Çakır

Supervisor: Anke Huckauf

Host Institution: Ulm University, Germany

Objectives: Human behaviour, e.g., while performing everyday activities or during social or navigational tasks, is the result of a complex interplay between eye, head, and body movements. These movements are not only closely coordinated with each other but also geared to the task at hand, and they are performed adaptively and proactively. While a large body of work has developed computational models for individual body parts or pairs of them, holistic computational modelling of human behaviour remains under-explored. The goal of this project is to pioneer computational models of human behaviour that, for the first time, tightly integrate information on eye, head, and body movements. The successful candidate will develop computer vision and machine learning methods to sense and learn models of human behaviour and will evaluate them on different behaviour-related tasks, such as activity recognition or forecasting, as well as during social encounters.

Doctoral Candidate: Huajian Qiu

Supervisor: Andreas Bulling

Host Institution: University of Stuttgart, Germany

Objectives: Mild cognitive impairment (MCI) is often diagnosed as a precursor to a full diagnosis of Alzheimer’s disease (AD), which ultimately leads to dementia with wide-ranging functional deficits. Eye movements have previously been reported as potential markers of AD. Recent studies have demonstrated disturbed eye movements (saccades and fixations) and reading problems already in persons with MCI. Detecting abnormal eye movements and reading problems may offer easy-to-use methods for identifying persons at risk of MCI and AD while clear clinical symptoms are still lacking. While existing studies were performed under well-controlled laboratory conditions, this project will investigate applications of eye tracking for detecting MCI in the wild, that is, in homes and in clinical examination rooms. We already have access to existing datasets recorded under controlled conditions and to collaborating patients; the project can therefore start quickly without waiting for data collection. Nevertheless, DC11 will contribute to the collection of clinically valid datasets.

Doctoral Candidate: Hasnain Ali Shah

Supervisor: Roman Bednarik

Host Institution: University of Eastern Finland, Finland

Objectives: Experience logging techniques can contribute to improving personal reflection (e.g., on well-being or performance) and the sharing of experiences (e.g., on social media). The idea of the project is to enhance experience logging with gaze in order to help the user reflect on her experiences of moving through a spatial setting. Since travelers are particularly exposed to novel experiences, tourism has been chosen as an example domain. The goals of the project are therefore: 1) To design novel approaches for gaze-supported travel experience logging that can be used to improve personal reflection and the sharing of experiences; in general, these approaches could be applied in tourism, therapy, and behavior analysis. 2) To log travel data in the wild, including gaze, video, GPS, and physiological signatures (e.g., heart rate), complemented with data from questionnaires (during travel) and from a visual support tool (after travel) used to annotate critical events (e.g., changes in emotion and preferences). 3) To train and validate automatic classifiers for critical events based on different features of the collected data and the respective annotations. 4) To develop a visual support tool that provides spatio-temporal summaries and visualizations of the collected data; these summaries are essential for personal reflection and dissemination. Here, the classifier will complement the visual support tool by providing event categories for non-annotated data, while the visual support tool can in turn be used to improve classifier training (e.g., through active learning).

Doctoral Candidate: Yiwei Wang

Supervisor: Peter Kiefer

Host Institution: Eidgenössische Technische Hochschule Zürich, Switzerland